Give every AI tool your full context

Your knowledge is scattered across tools. Lore brings it together — so Claude Code, ChatGPT, Cursor, and any MCP client can all work with the same understanding.

npm install -g @mishkinf/lore

Context that doesn't lose the source

Memory tools store summaries. Lore preserves your original documents and lets any tool cite them.

Without Lore

“Users want faster exports”

With Lore

“In the Jan 15 interview, Sarah said ‘The export takes forever, I’ve lost work twice this week’”

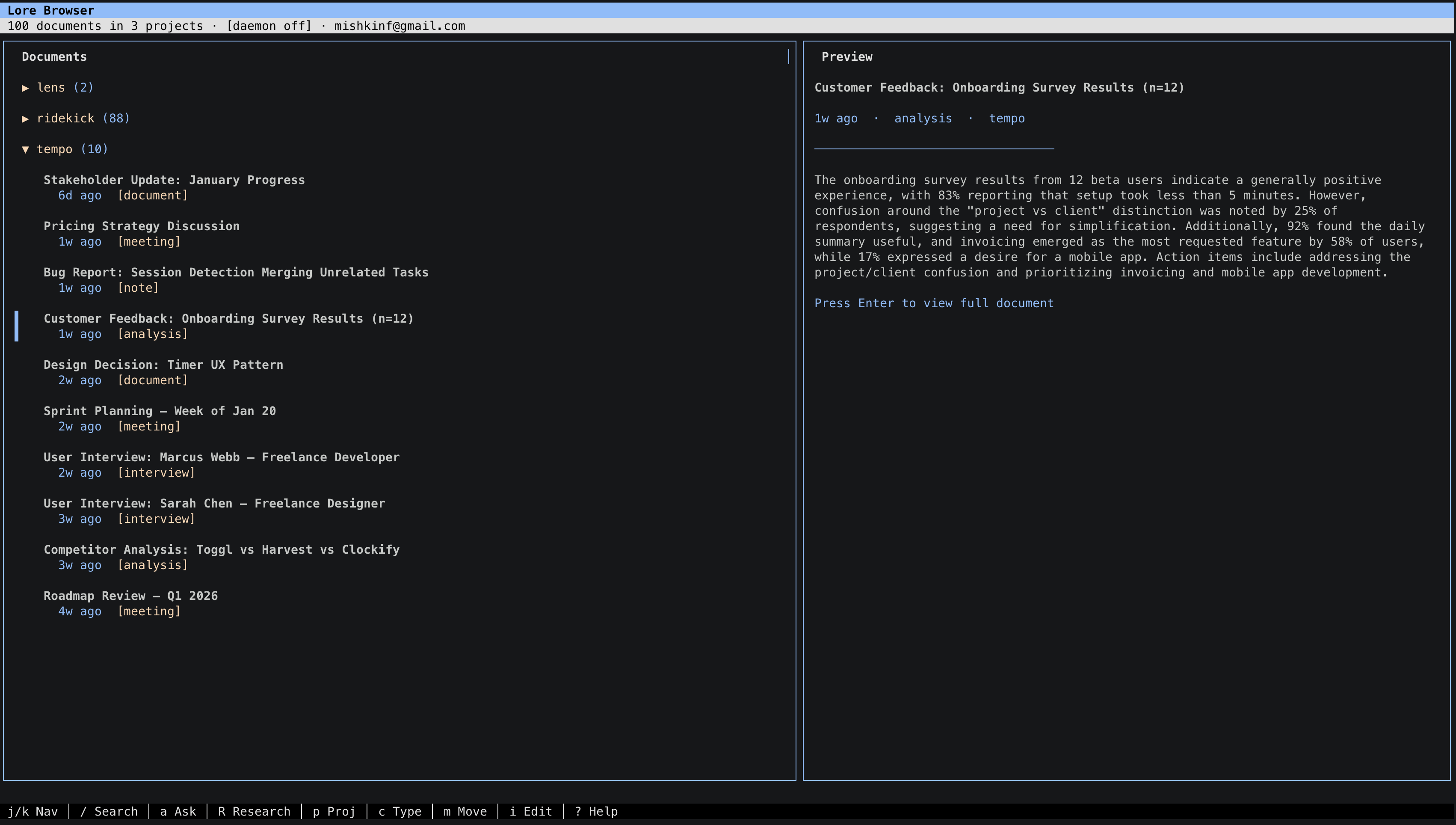

Browse and search your knowledge base from the terminal.

Everything in one place

Hybrid Search

Find by meaning or exact terms.

Citations

Every result links back to the original source.

MCP Tools

9 tools for Claude Desktop, Claude Code, and more.

Multi-Machine Sync

Same knowledge base everywhere. No duplicates.

Agentic Research

AI explores, cross-references, and synthesizes.

Universal Formats

Markdown, PDF, images, CSV, HTML, and more.
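Hybrid search generally means fusing a keyword ranking with an embedding-similarity ranking. This page doesn't say how Lore combines the two; reciprocal rank fusion (RRF) is one common technique, sketched here purely as an illustration. The file names and both input rankings are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. k=60 is the constant commonly used with RRF.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest score first; ties broken by ID so the order is deterministic.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results for one query from two retrievers.
keyword_hits = ["interview-jan-15.md", "changelog.md", "roadmap.md"]
semantic_hits = ["support-ticket-88.md", "interview-jan-15.md", "roadmap.md"]

fused = reciprocal_rank_fusion([keyword_hits, semantic_hits])
print(fused)
```

Documents that appear in both lists rise to the top, which is why the interview transcript outranks a document that only one retriever found.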

Point. Sync. Search.

Up and running in minutes

Requires an OpenAI API key (for embeddings) and an Anthropic API key (for research).

Install

npm install -g @mishkinf/lore

Setup

Configure API keys and sign in:

lore setup

Add Sources

Point at a folder of documents:

lore sync add

Sync & Search

lore sync

lore search "user pain points"

lore research "What should we prioritize?"

Use with Claude Desktop & Claude Code

Add Lore as an MCP server. API keys from lore setup are used automatically.
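If a config file already lists other MCP servers, the Claude Code entry shown below can be merged in by script rather than pasted over the file. This is a minimal sketch, not part of Lore: `add_lore_server` is a hypothetical helper, and the demo writes to a temporary directory so it touches no real config.

```python
import json
import tempfile
from pathlib import Path

# Server entry copied from this page's Claude Code example.
LORE_SERVER = {"command": "lore", "args": ["mcp"]}


def add_lore_server(config_path):
    """Add the Lore entry to an MCP config file, keeping other servers."""
    path = Path(config_path)
    config = json.loads(path.read_text()) if path.exists() else {}
    config.setdefault("mcpServers", {})["lore"] = LORE_SERVER
    path.write_text(json.dumps(config, indent=2) + "\n")
    return config


# Demo against a throwaway file that already defines another server.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = Path(tmp) / ".mcp.json"
    existing = {"mcpServers": {"other": {"command": "other-server"}}}
    demo_path.write_text(json.dumps(existing))
    merged = add_lore_server(demo_path)
    print(sorted(merged["mcpServers"]))  # prints: ['lore', 'other']
```

To apply it for real, call `add_lore_server(".mcp.json")` from the project root.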

For Claude Code, add this to .mcp.json in your project or ~/.claude.json globally:

{
  "mcpServers": {
    "lore": {
      "command": "lore",
      "args": ["mcp"]
    }
  }
}

For Claude Desktop, open Settings → Developer → Edit Config. Include your API keys, since Desktop doesn't inherit your shell environment:

{
  "mcpServers": {
    "lore": {
      "command": "lore",
      "args": ["mcp"],
      "env": {
        "OPENAI_API_KEY": "your-key",
        "ANTHROPIC_API_KEY": "your-key"
      }
    }
  }
}

For other MCP clients, add this wherever the client expects MCP server definitions:

{
  "command": "lore",
  "args": ["mcp"],
  "env": {
    "OPENAI_API_KEY": "your-key",
    "ANTHROPIC_API_KEY": "your-key"
  }
}

Available MCP Tools

| Tool | Description |
|---|---|
| search | Find by meaning or exact terms |
| get_source | Get full document content |
| list_sources | Browse by project or type |
| list_projects | Overview of all projects |
| retain | Save an insight or decision |
| ingest | Add a document directly |
| sync | Sync sources |
| archive_project | Archive a completed project |
| research | AI-powered deep research |
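MCP clients invoke these tools with a JSON-RPC 2.0 `tools/call` request. The sketch below builds one for the search tool. The envelope follows the MCP specification, but the `query` argument name is an assumption: this page doesn't list each tool's parameters, so check the input schema the server reports through `tools/list`.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# "query" is an assumed argument name, not confirmed by this page.
request = build_tool_call(1, "search", {"query": "user pain points"})
print(json.dumps(request, indent=2))
```

In practice the client (Claude Desktop, Claude Code, and so on) builds and sends this message for you; the sketch only shows what travels over the wire.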

Command overview

| Command | Description |
|---|---|
| lore setup | Guided configuration wizard |
| lore login | Sign in with email OTP |
| lore sync | Sync all configured sources |
| lore sync --dry-run | Preview what would sync |
| lore sync add | Add a source directory |
| lore sync list | List configured sources |
| lore search <query> | Hybrid search |
| lore research <query> | AI-powered deep research |
| lore browse | Interactive TUI browser |
| lore docs list | List all documents |
| lore docs get <id> | View a document |
| lore projects | List projects |
| lore projects archive <name> | Archive a project |
| lore mcp | Start MCP server |
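The commands above can be chained from a script, for example to preview a sync before running it. This sketch uses only commands and flags from the table; the helper names are hypothetical, and nothing is assumed about what the commands print.

```python
import shutil
import subprocess


def sync_command(dry_run=False):
    """Return the argv for `lore sync`, optionally with --dry-run."""
    return ["lore", "sync"] + (["--dry-run"] if dry_run else [])


def search_command(query):
    """Return the argv for `lore search <query>`."""
    return ["lore", "search", query]


def run(argv):
    """Run a command; check=True raises if it exits with a non-zero status."""
    return subprocess.run(argv, check=True)


if shutil.which("lore"):  # only run when the CLI is on PATH
    run(sync_command(dry_run=True))  # preview what would sync
    run(sync_command())              # then sync for real
    run(search_command("user pain points"))
else:
    print("lore CLI not found on PATH")
```

Passing the query as a single list element avoids shell quoting problems with spaces or punctuation.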

Common questions

What's the difference between Lore and a memory system?

Memory systems store processed facts without attribution. Lore preserves original documents so you can cite exactly what was said, by whom, and when.

What does sync cost?

Only new files cost anything to process. Re-syncing existing files is free.

Can multiple people share a knowledge base?

Not yet. Each user's data is isolated. Team sharing is planned.

What file formats are supported?

Markdown, JSONL, JSON, plain text, CSV, HTML, XML, PDF, and images. Claude extracts metadata automatically.

Do I need to set up any infrastructure?

No. The backend is fully hosted. Just install, log in, and bring your own API keys.

How does multi-machine sync work?

Sync the same folders from multiple machines. Lore skips anything already indexed.
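This page doesn't describe how Lore recognizes content that is already indexed. One common approach is to key each file by a hash of its content, so the same document synced from two machines is processed once. The sketch below illustrates that idea only; the in-memory set stands in for a shared index, and all names are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def content_hash(path):
    """Return the SHA-256 hex digest of a file's bytes."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def files_to_index(paths, indexed_hashes):
    """Return paths whose content is not yet indexed, recording their hashes."""
    new_files = []
    for path in paths:
        digest = content_hash(path)
        if digest not in indexed_hashes:
            indexed_hashes.add(digest)
            new_files.append(path)
    return new_files


with tempfile.TemporaryDirectory() as tmp:
    laptop = Path(tmp) / "laptop-notes.md"
    desktop = Path(tmp) / "desktop-notes.md"
    laptop.write_text("Sarah: the export takes forever.\n")
    desktop.write_text("Sarah: the export takes forever.\n")  # same content

    index = set()  # stands in for a shared, hosted index
    first = files_to_index([laptop], index)
    second = files_to_index([desktop], index)
    print(len(first), len(second))  # prints: 1 0
```

Hashing content rather than paths means a file is skipped even when it lives in a different folder or under a different name on the second machine.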